Spark dynamic allocation and Spark Structured Streaming. Once the SparkContext has been created with its properties, you cannot change them afterwards. After an executor has been idle for spark.dynamicAllocation.executorIdleTimeout seconds, it is released. By default, an executor that holds cached data is not removed.

spark.dynamicAllocation.executorAllocationRatio=1 (the default) means that Spark will try to allocate P executors = 1.0 * N tasks / T cores to process N pending tasks. In this mode, each Spark application still has a fixed and independent memory allocation (set by spark.executor.memory). If dynamic allocation is enabled and an executor that has cached data blocks has been idle for more than the cached-executor idle timeout, the executor is removed.
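
The formula above can be sketched in a few lines of Python. This is only an illustration, not Spark's own code: the function name `target_executors` and its parameters are hypothetical, and rounding up and clamping to a minimum and maximum are assumptions of this sketch.

```python
import math


def target_executors(pending_tasks, cores_per_executor, allocation_ratio=1.0,
                     min_executors=0, max_executors=None):
    """Estimate P = ratio * N tasks / T cores, rounded up and clamped.

    Assumes each task needs one core, so T cores per executor means
    T tasks can run on one executor at the same time.
    """
    if not 0.0 < allocation_ratio <= 1.0:
        raise ValueError("allocation_ratio must be in (0, 1]")
    if cores_per_executor < 1:
        raise ValueError("cores_per_executor must be at least 1")

    # Round up so that a partially filled executor still counts as one.
    wanted = math.ceil(allocation_ratio * pending_tasks / cores_per_executor)

    # Keep the result inside the configured bounds (assumed behaviour).
    if max_executors is not None:
        wanted = min(wanted, max_executors)
    return max(wanted, min_executors)


print(target_executors(100, 4))                     # ratio 1.0
print(target_executors(100, 4, 0.5))                # ratio 0.5
print(target_executors(100, 4, max_executors=10))   # capped by the maximum
```

With a ratio below 1, fewer executors are requested for the same number of pending tasks, which trades some latency for lower resource use.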

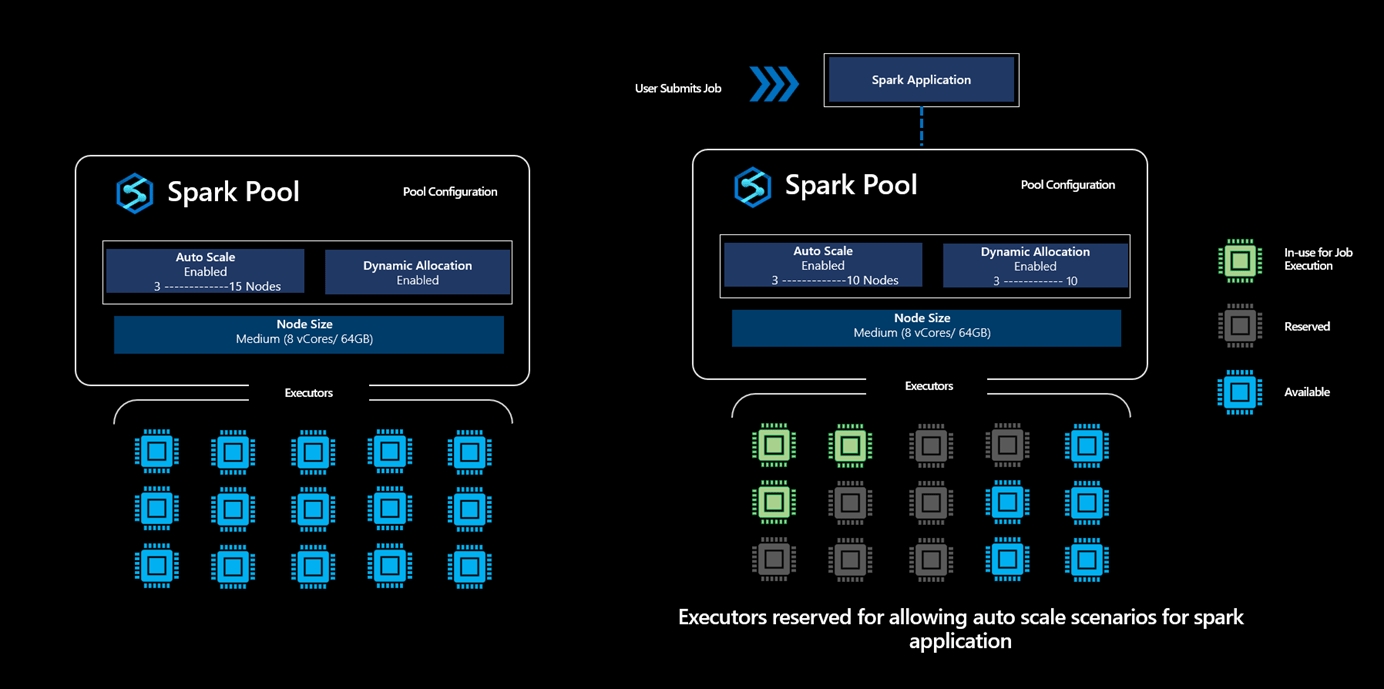

Dynamic allocation can be enabled in Spark by setting the spark.dynamicAllocation.enabled parameter to true. If it is not configured correctly, a Spark job can consume the resources of the entire cluster.

Enable Dynamic Resource Allocation Using Spark.

Resource allocation is an important aspect of executing any Spark job. As per the Spark documentation, spark.dynamicAllocation.executorAllocationRatio is a ratio used to reduce the number of executors requested relative to full parallelism.

When Dynamic Allocation Is Enabled, Minimum Number Of Executors To Keep Alive While The Application Is Running.

If an executor reaches the idle threshold but holds cached data, it must also exceed the cached-data idle timeout (spark.dynamicAllocation.cachedExecutorIdleTimeout) before it becomes eligible for release.
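
The two timeouts interact roughly as in the sketch below. It is a simplified model rather than Spark's implementation: the function name `is_release_candidate` is hypothetical, and the default values (60 seconds for the idle timeout, no limit for executors with cached data) are assumptions you should check against your Spark version.

```python
import math


def is_release_candidate(idle_seconds, has_cached_data,
                         executor_idle_timeout=60.0,
                         cached_executor_idle_timeout=math.inf):
    """Return True if an idle executor may be released in this model."""
    # Every executor must first reach the ordinary idle threshold.
    if idle_seconds < executor_idle_timeout:
        return False
    # An executor with cached blocks must also exceed the cached-data timeout.
    if has_cached_data:
        return idle_seconds > cached_executor_idle_timeout
    return True


# With an unlimited cached-data timeout, cached executors are never released.
print(is_release_candidate(90, has_cached_data=False))
print(is_release_candidate(90, has_cached_data=True))
print(is_release_candidate(90, has_cached_data=True,
                           cached_executor_idle_timeout=80))
```

Setting a finite cached-data timeout is what allows executors holding cached blocks to be reclaimed at all in this model.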

The One Which Contains Cache Data Will Not Be Removed.

Dynamic allocation (of executors), also known as elastic scaling, is a Spark feature that allows for adding or removing Spark executors dynamically to match the workload. Set spark.shuffle.service.enabled = true and, optionally, configure spark.shuffle.service.port.
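
As a rough illustration, the settings mentioned in this article can be collected in one place and turned into spark-submit arguments. The helper names (`dynamic_allocation_conf`, `to_submit_args`), the example values, and the script name `app.py` are hypothetical; only the property names are Spark's.

```python
def dynamic_allocation_conf(min_executors=1, max_executors=10,
                            idle_timeout="60s", shuffle_port=None):
    """Build a dict of properties that switch on dynamic allocation."""
    conf = {
        "spark.dynamicAllocation.enabled": "true",
        "spark.shuffle.service.enabled": "true",
        "spark.dynamicAllocation.minExecutors": str(min_executors),
        "spark.dynamicAllocation.maxExecutors": str(max_executors),
        "spark.dynamicAllocation.executorIdleTimeout": idle_timeout,
    }
    # The shuffle service port is optional, so add it only when given.
    if shuffle_port is not None:
        conf["spark.shuffle.service.port"] = str(shuffle_port)
    return conf


def to_submit_args(conf):
    """Turn the dict into a flat list of --conf key=value arguments."""
    args = []
    for key, value in conf.items():
        args += ["--conf", f"{key}={value}"]
    return args


command = ["spark-submit"] + to_submit_args(dynamic_allocation_conf()) + ["app.py"]
print(" ".join(command))
```

Passing the properties at submit time means they are in place before the SparkContext is created, which matters because they cannot be changed afterwards.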

This Can Be Done As Follows:

Dynamic allocation is a feature in Apache Spark that allows for automatic adjustment of the number of executors allocated to an application.

To get started with dynamic resource allocation in Spark, two tasks are needed: set spark.dynamicAllocation.enabled to true, and set spark.shuffle.service.enabled to true (optionally configuring spark.shuffle.service.port).